The Reviewed Paper: Preserving Differential Privacy in Convolutional Deep Belief Networks

(💐Authors: Nhat Hai Phan, Xintao Wu, Dejing Dou)

As you may have noticed, we have been hosting OpenMined's Paper Session events since August. The paper we covered in August focuses on differential privacy for Convolutional Deep Belief Networks (CDBNs).

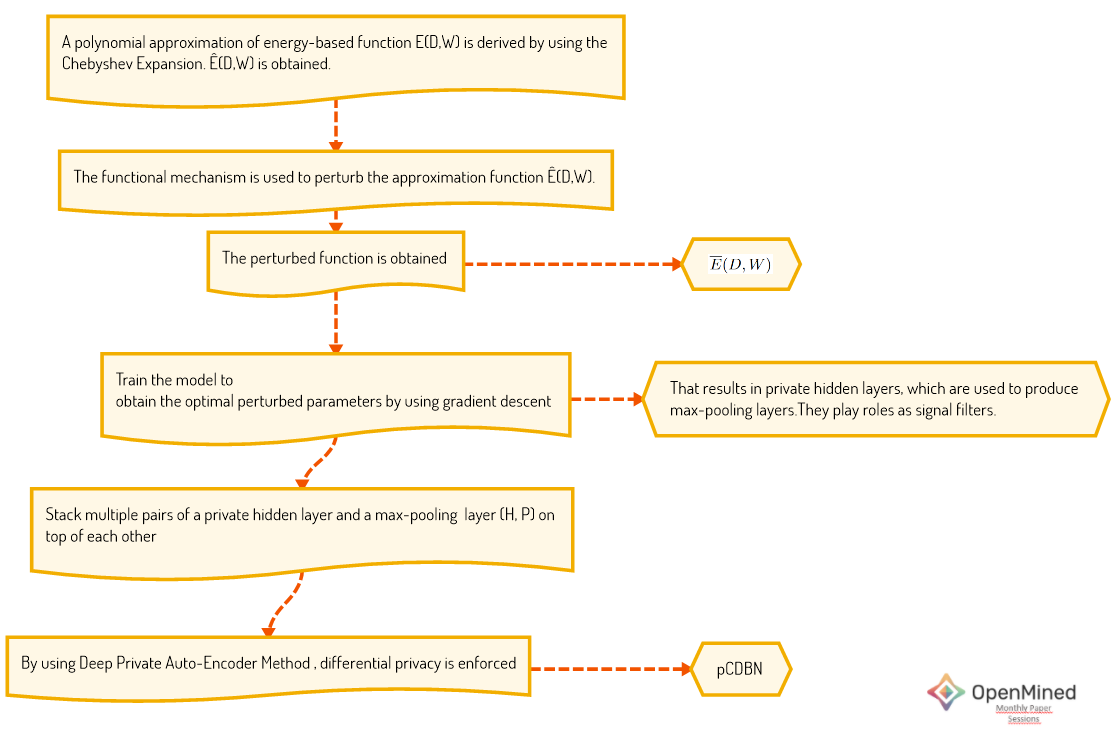

In this paper, the main idea of Phan et al. is to enforce Ɛ-differential privacy by using the functional mechanism to perturb the energy-based objective functions of traditional CDBNs, rather than their results. The functional mechanism perturbs the objective function by injecting Laplace noise into its polynomial coefficients.
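To make the idea of perturbing polynomial coefficients concrete, here is a minimal sketch on a toy one-dimensional least-squares objective. It is not the CDBN objective from the paper. The function names, the toy data, and the assumption that every `x` and `y` lies in [-1, 1] are all illustrative choices. Under that assumption a single record contributes at most 1 + 2 + 1 = 4 to the L1 norm of the coefficients, so replacing one record changes them by at most 8.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def perturbed_coefficients(xs, ys, epsilon, rng):
    """Noisy coefficients of sum_i (y_i - x_i * w)^2 = c0 + c1 * w + c2 * w^2."""
    c0 = sum(y * y for y in ys)
    c1 = -2.0 * sum(x * y for x, y in zip(xs, ys))
    c2 = sum(x * x for x in xs)
    # Assumption: |x_i| <= 1 and |y_i| <= 1, so the L1 sensitivity of
    # (c0, c1, c2) under replacing one record is at most 2 * 4 = 8.
    sensitivity = 8.0
    scale = sensitivity / epsilon
    return tuple(c + laplace_noise(scale, rng) for c in (c0, c1, c2))

def minimise_quadratic(coeffs):
    """Minimise the noisy quadratic; the data are never touched again."""
    _, c1, c2 = coeffs
    if c2 <= 0:
        raise ValueError("noisy quadratic is not convex; more data or budget needed")
    return -c1 / (2.0 * c2)

# Toy data (hypothetical): y = 0.5 * x with x drawn from [-1, 1].
rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(10000)]
ys = [0.5 * x for x in xs]

w_private = minimise_quadratic(perturbed_coefficients(xs, ys, epsilon=1.0, rng=rng))
print(w_private)
```

The noise is added once, to the coefficients, and the optimiser then works only with the noisy polynomial. That is why the privacy cost in this sketch does not depend on how many optimisation steps follow.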

The key contribution of the paper is the proposal to use Chebyshev expansion to derive an approximate polynomial representation of the objective functions. The authors show that they can further derive the sensitivity and error bounds of this approximate polynomial representation, which means that preserving differential privacy in CDBNs is feasible.

Phan et al. highlight several studies in the literature. Their literature review begins with the progress of deep learning in healthcare and stresses the need for privacy in deep learning, because healthcare data is private and sensitive. In the paper, the Ɛ-differential privacy approach is used to protect privacy.

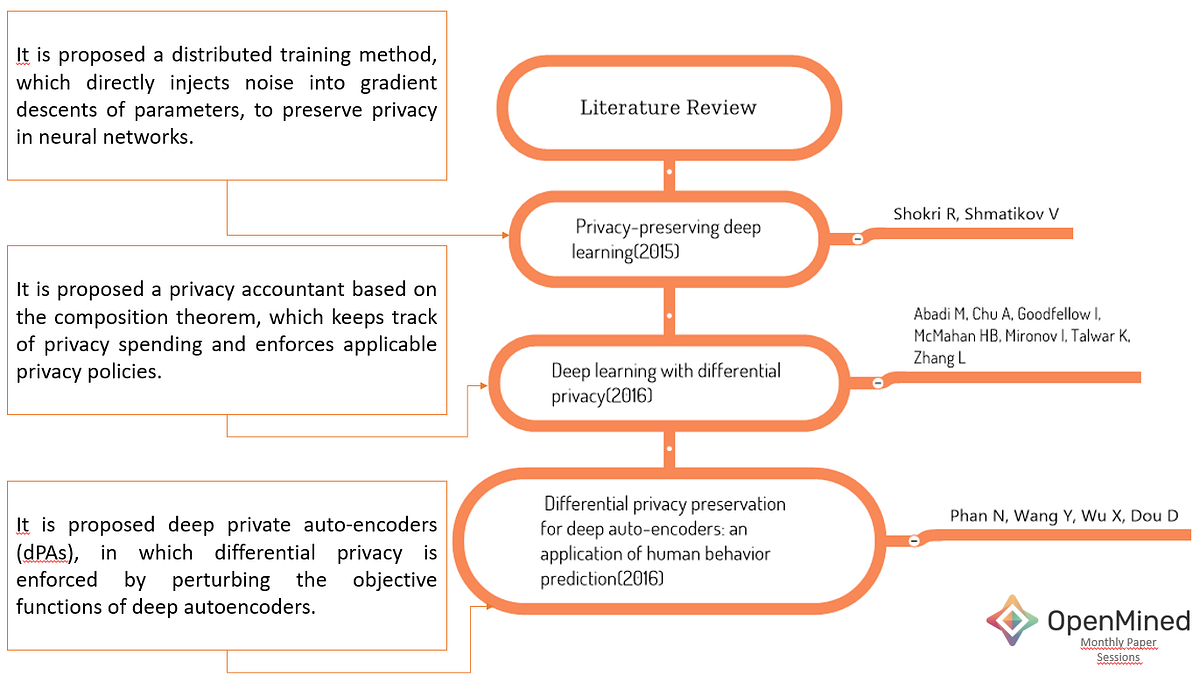

Looking at the literature, several major studies stand out (please see Fig.1):

📌Shokri et al. implemented DP by adding noise to the gradients of the parameters during training. Although this method is attractive for deep learning applications on mobile devices, it consumes a large amount of privacy budget to achieve good model accuracy, because of the large number of training epochs and the large number of parameters[1].

📌To address this challenge, Abadi et al. proposed a privacy accountant based on the composition theorem. However, the dependency on the number of training epochs remains unresolved[2].

📌Phan et al. proposed an approach that is independent of the number of training epochs, called deep private auto-encoders (dPAs). They enforced differential privacy by perturbing the objective functions of deep auto-encoders[3].
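The dependence on the number of training epochs can be illustrated with a rough sketch. It assumes basic sequential composition and Laplace noise, which is a simplification and not the accounting actually used in [1] or [2]. The function names and the even split of the budget are hypothetical. With a fixed total budget, every extra noisy release shrinks the per-step budget and inflates the noise, whereas a one-time perturbation of the objective is unaffected by the number of steps.

```python
def laplace_scale_gradient_perturbation(sensitivity, total_epsilon, steps):
    """Per-step Laplace scale when the budget is split evenly over `steps` releases."""
    # Basic sequential composition: the per-step epsilons add up to total_epsilon.
    epsilon_per_step = total_epsilon / steps
    return sensitivity / epsilon_per_step

def laplace_scale_objective_perturbation(sensitivity, total_epsilon):
    """Laplace scale when noise is injected once, into the objective function."""
    return sensitivity / total_epsilon

for steps in (1, 10, 100, 1000):
    per_step = laplace_scale_gradient_perturbation(1.0, 1.0, steps)
    one_off = laplace_scale_objective_perturbation(1.0, 1.0)
    print(f"steps={steps:5d}  per-step scale={per_step:8.1f}  one-off scale={one_off:.1f}")
```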

According to the authors, two points urgently require further research in developing a privacy-preserving framework:

- Independence of the privacy budget consumption from the number of training epochs;

- Applicability to typical energy-based deep neural networks

Freshen up What We Know

In the paper, the authors aim to develop a private convolutional deep belief network (pCDBN) based on a CDBN[4]. A CDBN is an energy-based, hierarchical generative model that scales to full-sized images[5][6][7]. The paper therefore requires revisiting some important concepts, including:

- Differential Privacy

- Chebyshev Polynomial

- Restricted Boltzmann Machines

- Convolutional Deep Belief Network

- Deep Private Auto-Encoder Method

- Gibbs sampling

- Functional Mechanism

✨What is the challenge? / 🤔How do they deal with it?

We need to approximate the objective functions with polynomials so that noise can be injected into their coefficients. However, estimating the lower and upper bounds of the approximation error incurred by applying a particular polynomial in deep neural networks is very challenging. The approximation error bounds must also be independent of the number of data instances, so that the approach can be applied to large datasets without consuming excessive privacy budget. Chebyshev polynomials stand out as a way to solve this problem.
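As a small illustration of Chebyshev approximation, the sketch below fits a degree-4 Chebyshev expansion to the softplus function on [-1, 1] and measures the worst-case error on a grid. Softplus, the degree, and the interval are assumptions chosen for illustration, not necessarily the terms the authors approximate. The function names are hypothetical as well.

```python
import math

def softplus(t):
    """log(1 + exp(t)), used here only as an example target function."""
    return math.log1p(math.exp(t))

def chebyshev_coefficients(f, degree):
    """Coefficients c_k of the Chebyshev interpolant of f on [-1, 1]."""
    n = degree + 1
    # Chebyshev nodes: roots of T_n.
    nodes = [math.cos(math.pi * (j + 0.5) / n) for j in range(n)]
    values = [f(t) for t in nodes]
    return [
        (2.0 / n) * sum(v * math.cos(math.pi * k * (j + 0.5) / n)
                        for j, v in enumerate(values))
        for k in range(n)
    ]

def chebyshev_eval(coeffs, t):
    """Evaluate c_0 / 2 + sum_{k>=1} c_k * T_k(t) via the three-term recurrence."""
    t_prev, t_curr = 1.0, t  # T_0 and T_1
    total = 0.5 * coeffs[0]
    for c in coeffs[1:]:
        total += c * t_curr
        t_prev, t_curr = t_curr, 2.0 * t * t_curr - t_prev
    return total

coeffs = chebyshev_coefficients(softplus, degree=4)
grid = [-1.0 + 2.0 * i / 200 for i in range(201)]
max_error = max(abs(softplus(t) - chebyshev_eval(coeffs, t)) for t in grid)
print(f"max |error| on [-1, 1]: {max_error:.2e}")
```

In this sketch the error depends only on the function being approximated and on the polynomial degree, not on how many data instances are later plugged into the polynomial.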

🚀Let’s dive into the approach

Private Convolutional Deep Belief Network

This section summarizes the approach. Fig.2 depicts the proposed pCDBN algorithm. The approach does not enforce privacy in the max-pooling layers, because the pooling layers are used only as signal filters.

💥I strongly recommend reading Section 2 of the paper, so that most of the mathematical expressions make more sense.

🦜Please click for the slides

👩💻 I am creating a notebook for this blog post. Please feel free to join me in the learning process (under construction🚧)

Acknowledgements

💐Thank you very much to Helena Barmer for being the best teammate ever.

💐Thank you very much to Emma Bluemke and Nahua Kang for their editorial review.

References

[1] Shokri R, Shmatikov V (2015) Privacy-preserving deep learning. In: CCS’15, pp 1310–1321

[2] Abadi M, Chu A, Goodfellow I, McMahan HB, Mironov I, Talwar K, Zhang L (2016) Deep learning with differential privacy. arXiv:1607.00133

[3] Phan N, Wang Y, Wu X, Dou D (2016) Differential privacy preservation for deep auto-encoders: an application of human behavior prediction. In: AAAI’16, pp 1309–1316

[5] Energy-Based Models. Available: https://cs.nyu.edu/~yann/research/ebm/

[6] Preserving Differential Privacy in Convolutional Deep Belief Networks. Available: https://arxiv.org/pdf/1706.08839v2.pdf

[7] A Tutorial on Energy-Based Learning. Available: http://yann.lecun.com/exdb/publis/pdf/lecun-06.pdf

[8] Zhu T, Li G, Zhou W, Yu PS (2017) Differential Privacy and Applications. Springer